This lecture is a philosophically informed mathematical introduction to the ideas and notation of probability theory, drawn from its most important historical theorist. It is part of an ongoing formal reconstruction of Laplace’s Calculus of Probability from his introductory essay in English translation, “A Philosophical Essay on Probabilities” (cite: PEP), which can be read alongside these notes; the notes are divided into the same sections as Laplace’s essay. I have included deeper supplements from the untranslated treatise Théorie Analytique des Probabilités (cite: TA), translated personally and with online translation tools, in section 1.10 and the Appendix (3).

The General Principles of the Calculus of Probabilities

$\Omega$ is the state of all possible events.

$\omega \in \Omega$ is an event as element of the state.

1st Principle: The probability of the occurrence of an event $\omega$ is the number of favorable cases divided by the total number of causal cases, assuming all cases are equally likely.

$\Omega'$ is the derivational system of the state $\Omega$, as the space of cases that will cause different events in the state. $\Omega'_{\omega}$ is the derivational system of the state favoring the event $\omega$. The order of a particular state (or derivational state-system) is given by the measure ($|\cdot|$), evaluated as the number of elements in it. With $m=|\Omega'_{\omega}|$ favorable cases among $n=|\Omega'|$ cases,

\[P(\omega)=P(\Omega=\omega)=\frac{|\Omega_{\omega}'|}{|\Omega'|}=\frac{m}{n}\]
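As a minimal sketch of the first principle, assume the space of cases is the set of ordered outcomes of two fair dice. The dice and the helper name `classical_probability` are illustrative assumptions, not taken from Laplace. The probability is then a count of favorable cases over a count of all cases:

```python
from fractions import Fraction
from itertools import product


def classical_probability(cases, favors):
    """Principle 1: favorable cases over all cases, every case equally likely."""
    cases = list(cases)
    favorable = sum(1 for case in cases if favors(case))
    return Fraction(favorable, len(cases))


# Hypothetical space of cases: the 36 ordered outcomes of two fair dice.
two_dice = list(product(range(1, 7), repeat=2))
p_seven = classical_probability(two_dice, lambda case: sum(case) == 7)
print(p_seven)  # 1/6
```

Exact fractions are used so that the result reads as the ratio of cases rather than as a rounded decimal.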

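If we introduce time as the attribute of case-based favorability, i.e. causality, the event $\omega$ at time $t_1$ is conditioned on the state at the prior time $t_0$:

\[P(\omega)=P\bigg(\Omega(t_1)=\omega \bigg| \Omega(t_0)\bigg)=\frac{|\Omega_{\omega}'(t_1 | t_0)|}{|\Omega'(t_1 | t_0)|}=\frac{m}{n}\]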

2nd Principle: Assuming the conditioning cases are not equal in probability, the probability of the occurrence of an event $\omega$ is the sum of the probabilities of the favorable cases $\omega'_{i_j}$.

\[P(\omega)=\sum_j P(\omega_{i_j}')\]
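A small sketch of the second principle, assuming a hypothetical loaded die whose face 6 is three times as likely as each other face. The die and the name `event_probability` are assumptions for illustration. The probability of the event is the sum of the probabilities of its favorable cases:

```python
from fractions import Fraction

# Hypothetical loaded die: the cases are no longer equally likely.
case_probability = {
    1: Fraction(1, 8), 2: Fraction(1, 8), 3: Fraction(1, 8),
    4: Fraction(1, 8), 5: Fraction(1, 8), 6: Fraction(3, 8),
}


def event_probability(case_probability, favors):
    """Principle 2: sum the probabilities of the favorable cases."""
    return sum(p for case, p in case_probability.items() if favors(case))


p_even = event_probability(case_probability, lambda face: face % 2 == 0)
print(p_even)  # 5/8
```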

3rd Principle: The probability of the combined event ($\omega_1 \cap \cdots \cap \omega_n$) of independent events $\omega_1, \dots, \omega_n$ is the product of the probabilities of the component events.

\[P(\omega_1 \cap \cdots \cap \omega_n) = \prod_i P(\omega_i)\]
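The product rule for independent events can be checked by enumeration. This sketch assumes two fair dice thrown independently; the setup and the helper `prob` are illustrative, not from the source:

```python
from fractions import Fraction
from itertools import product

cases = list(product(range(1, 7), repeat=2))  # two fair dice, assumed independent


def prob(favors):
    """Classical probability over the 36 equally likely cases."""
    return Fraction(sum(1 for case in cases if favors(case)), len(cases))


p_first = prob(lambda case: case[0] == 6)    # first die shows 6
p_second = prob(lambda case: case[1] == 6)   # second die shows 6
p_both = prob(lambda case: case[0] == 6 and case[1] == 6)
print(p_both, p_first * p_second)  # 1/36 1/36
```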

4th Principle: The probability of a compound event ($\omega_0 \cap \omega_1$) of two events dependent upon each other, $\omega_0$ and $\omega_1$, where $\omega_1$ is after $\omega_0$, is the probability of the first times the probability of the second conditioned on the first having occurred.

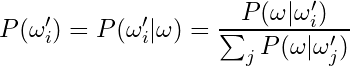

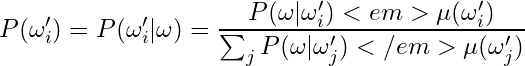
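A sketch of the fourth principle for dependent events, assuming a hypothetical urn of 3 white and 2 black balls drawn twice without replacement (the urn is an illustration, not Laplace's own example). The probability that both draws are white, counted directly, matches the probability of the first times the probability of the second given the first:

```python
from fractions import Fraction
from itertools import permutations

balls = ["w", "w", "w", "b", "b"]         # hypothetical urn: 3 white, 2 black
draws = list(permutations(range(5), 2))   # ordered draws of two distinct balls


def prob(favors):
    """Classical probability over the 20 equally likely ordered draws."""
    return Fraction(sum(1 for draw in draws if favors(draw)), len(draws))


p_first_white = prob(lambda d: balls[d[0]] == "w")
p_both_white = prob(lambda d: balls[d[0]] == "w" and balls[d[1]] == "w")
p_second_given_first = Fraction(2, 4)     # 2 white balls remain among 4
print(p_both_white, p_first_white * p_second_given_first)  # 3/10 3/10
```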

5th Principle (p.15): The probability of an expected event $\omega_1$ conditioned on an occurred event $\omega_0$ is the probability of the composite event $\omega_0 \cap \omega_1$ divided by the a priori probability of the occurred event.

\[P(\omega_1|\omega_0)=\frac{P(\omega_0 \cap \omega_1)}{P(\omega_0)}\]

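Always, $P$ is from a prior state, as can be given by a previous event $\omega_{-1}$. Thus, if we assume the present to be $t_0$, the prior time to have been $t_{-1}$, and the future time to be $t_1$, then the probability of the presently occurred event is made from $\omega_{-1}$ as

\[P(\omega_0)=P(\omega_0 | \omega_{-1})=P\bigg( \Omega(t_0)=\omega_0 \bigg | \Omega(t_{-1})=\omega_{-1} \bigg)\]

The probability of the combined event $\omega_0 \cap \omega_1$ is likewise conditioned on $\omega_{-1}$:

\[P(\omega_0 \cap \omega_1)=P(\omega_0 \cap \omega_1 | \omega_{-1})=P\bigg(\Omega(t_0)=\omega_0 \bigcap \Omega(t_1)=\omega_1 \bigg| \Omega(t_{-1})=\omega_{-1} \bigg)\]

Thus,

\[P(\omega_1|\omega_0)=P\bigg( (\omega_1|\omega_0) \bigg | \omega_{-1} \bigg)=\frac{P(\omega_0 \cap \omega_1 | \omega_{-1})}{P(\omega_0 | \omega_{-1})}\]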
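The fifth principle can be sketched by computing a conditional probability in two ways. The two dice and the two chosen events are assumptions made only for illustration:

```python
from fractions import Fraction
from itertools import product

cases = list(product(range(1, 7), repeat=2))  # two fair dice (hypothetical)


def prob(favors, space):
    """Classical probability of an event within a space of equally likely cases."""
    return Fraction(sum(1 for case in space if favors(case)), len(space))


def occurred(case):
    """The occurred event: the first die is even."""
    return case[0] % 2 == 0


def expected(case):
    """The expected event: the faces sum to at least 10."""
    return sum(case) >= 10


p_joint = prob(lambda case: occurred(case) and expected(case), cases)
p_conditional = p_joint / prob(occurred, cases)

# Counting directly inside the cases where the first event occurred agrees.
restricted = [case for case in cases if occurred(case)]
print(p_conditional, prob(expected, restricted))  # 2/9 2/9
```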

6th Principle:

1. For a constant event, the likelihood of a cause of an event is the same as the probability that the event will occur.
2. The probability of the existence of any one of those causes is the probability of the event (resulting from this cause) divided by the sum of the probabilities of similar events from all causes.
3. For causes, considered a priori, which are unequally probable, the probability of the existence of a cause is the probability of the caused event divided by the sum of the products of the probability of the events and the possibility (a priori probability) of their cause.

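For an event $\omega$, let $\omega'$ be its cause. While $P$ is the probability of an actual existence, the likelihood is the measure of the a priori possibility of a cause, since its existence is unknown. These two measurements may be used interchangeably where the existential nature of the measurement is known or where substitutions as approximations are permissible. In Principle 5 they are conflated, since the probability of an occurred event always implies an a priori likelihood.

- for $\omega$ constant (i.e. only one cause, $\omega'$), the likelihood of the cause equals the probability of the event;

- for causes equally likely, the probability of a cause is the probability of the event resulting from it divided by the sum of the probabilities of the event from all causes.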

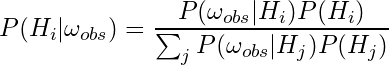

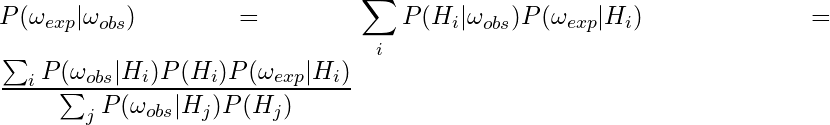
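A minimal sketch of the sixth principle, assuming two hypothetical urns as the causes of an observed white ball. The urn compositions, the priors, and the name `cause_probabilities` are all illustrative assumptions. With equal priors the rule reduces to the second statement of the principle; with unequal priors it is the third:

```python
from fractions import Fraction


def cause_probabilities(prior, likelihood):
    """Principle 6: prior * P(event | cause), normalized over all causes."""
    weights = {cause: prior[cause] * likelihood[cause] for cause in prior}
    total = sum(weights.values())
    return {cause: weight / total for cause, weight in weights.items()}


# Hypothetical urns: P(white | urn A) = 3/4, P(white | urn B) = 1/4.
p_white_given = {"A": Fraction(3, 4), "B": Fraction(1, 4)}

equal = cause_probabilities({"A": Fraction(1, 2), "B": Fraction(1, 2)}, p_white_given)
unequal = cause_probabilities({"A": Fraction(1, 3), "B": Fraction(2, 3)}, p_white_given)
print(equal["A"], unequal["A"])  # 3/4 3/5
```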

7th Principle (p. 17): The probability of a future event, $\omega_1$, is the sum of the products of the probability of each cause, drawn from the event observed, by the probability that, this cause existing, the future event will occur.

The present is $t_0$ while the future time is $t_1$. Thus, the future event expected is $\omega_1$. Given that $\omega_0$ has been observed, we ask about the probability of a future event $\omega_1$ from the set of causes $\{\omega_1^{(i)}\}$ (change of notation for causes).

\[P(\omega_1|\omega_0)=\sum_i P(\omega_1^{(i)} | \omega_0)*P(\omega_1 | \omega_1^{(i)})\]
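The seventh principle can be sketched with the same kind of setup: two hypothetical urns, equally likely a priori, from one of which a white ball was drawn and replaced. The urns and the name `future_probability` are assumptions for illustration. The probability that the next draw is white weights each urn by its probability given the observation:

```python
from fractions import Fraction

prior = {"A": Fraction(1, 2), "B": Fraction(1, 2)}          # a priori causes
p_white_given = {"A": Fraction(3, 4), "B": Fraction(1, 4)}  # P(white | cause)


def future_probability(prior, p_observed_given, p_future_given):
    """Principle 7: sum over causes of P(cause | observed) * P(future | cause)."""
    weights = {c: prior[c] * p_observed_given[c] for c in prior}
    total = sum(weights.values())
    return sum(weights[c] / total * p_future_given[c] for c in prior)


# A white ball was observed and replaced; probability the next draw is white.
p_next_white = future_probability(prior, p_white_given, p_white_given)
print(p_next_white)  # 5/8
```

The result exceeds the a priori value of 1/2 because the observation makes the urn richer in white balls the more probable cause.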

How are we to consider causes? They can be historical events with a causal-deterministic relationship to the future, or they can be considered event-conditions, as a spatiality (possibly true over a temporal duration) rather than a temporality (true at one time). Generally, we can consider causes to be hypotheses $H_i$.

Clearly, Principle 6 is the same as Bayes’ Theorem (Wassermann, Thm. 2.16), which articulates the hypotheses as a partition of $\Omega$, in that $\Omega=\bigcup_i H_i$ ($H_i \cap H_j = \emptyset$ for $i \neq j$) and each hypothesis is a limitation of the domain of possible events. The observed event is also considered a set of events rather than a single ‘point.’ Therefore, Principle 6 says that “the probability that the possibility of the event is comprised within given limits is the sum of the fractions comprised within these limits” (Laplace, p.18).

8th Principle (p.20): The Advantage of Mathematical Hope, $A$, depending on several events, is the sum of the products of the probability of each event by the benefit to its occurrence.

Let $\omega=\{\omega_i\}$ be the set of events under consideration. Let $B$ be the benefit function giving a value to each event. The advantage hoped for from these events is:

\[A(\omega)=\sum_i B(\omega_i)*P(\omega_i)\]

A fair game is one whose cost of playing is equal to the advantage gained through it.
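A short sketch of the eighth principle and of the fair-game condition, assuming a hypothetical game with two prizes. The prizes, their probabilities, and the name `advantage` are illustrative:

```python
from fractions import Fraction


def advantage(events):
    """Principle 8: sum of probability * benefit over the events."""
    return sum(p * benefit for p, benefit in events)


# Hypothetical game: win 100 with probability 1/100, win 10 with probability 1/10.
game = [(Fraction(1, 100), 100), (Fraction(1, 10), 10)]
fair_cost = advantage(game)   # the cost of playing that makes the game fair
print(fair_cost)  # 2
```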

9th Principle (p.21): The Advantage $A$, depending on a series of events ($\omega$), is the sum of the products of the probability of each favorable event by the benefit to its occurrence minus the sum of the products of the probability of each unfavorable event by the cost to its occurrence.

Let $\omega$ be the series of events under consideration, partitioned into $\omega^+$ and $\omega^-$ for favorable and unfavorable events. Let $B$ be the benefit function for $\omega^+$ and $L$ the loss function for $\omega^-$, each giving the value to each event. The advantage of playing the game is:

\[A(\omega)=\sum_{i: \omega_i \in \omega^+} B(\omega_i)P(\omega_i) - \sum_{j: \omega_j \in \omega^-} L(\omega_j)P(\omega_j)\]

Mathematical Hope is the positivity of A. Thus, if A is positive, one has hope for the game, while if A is negative one has fear.

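In generality, $X$ is the random variable function, $X: \omega \to \mathbb{R}$, that gives a value to each event, either a benefit ($X(\omega_i)>0$) or cost ($X(\omega_i)<0$). The absolute expectation ($E$) of value for the game from these events is:

\[E(\omega)=\sum_i X(\omega_i)*P(\omega_i)\]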
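The two forms, advantage as benefits minus costs and expectation of a signed value, agree once each cost is entered as a negative value. A sketch under the assumption of a hypothetical one-roll game (all numbers and function names are illustrative):

```python
from fractions import Fraction

# Hypothetical game: gain 12 with probability 1/6, otherwise pay 3.
favorable = [(Fraction(1, 6), 12)]    # (probability, benefit)
unfavorable = [(Fraction(5, 6), 3)]   # (probability, cost)


def advantage(favorable, unfavorable):
    """Principle 9: expected benefit minus expected cost."""
    gain = sum(p * benefit for p, benefit in favorable)
    loss = sum(p * cost for p, cost in unfavorable)
    return gain - loss


def expectation(signed_values):
    """General form: sum of probability * signed value."""
    return sum(p * x for p, x in signed_values)


signed = favorable + [(p, -cost) for p, cost in unfavorable]
a = advantage(favorable, unfavorable)
print(a, expectation(signed))  # -1/2 -1/2
```

Here the advantage is negative, so in the terms used above the game inspires fear rather than hope.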

10th Principle (p.23): The relative value of an infinitely small sum is equal to its absolute value divided by the total benefit of the person interested.

This section can be explicated by examining Laplace’s corresponding section in Théorie Analytique (S.41-42, p.432-445) as a development of Bernoulli’s work on the subject.

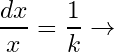

(432) For a *physical fortune* $x$, an increase by $dx$ produces a moral good reciprocal to the fortune,

\[dy=\frac{kdx}{x}\]

for a constant $k$. $k$ is the “units” of moral goodness (i.e. utility), in that $x=k$ is the quantity of physical fortune whereby a marginal increase by unity of physical fortune is equivalent to unity of moral fortune. For a *moral fortune* $y$,

\[y=k\ln x + \ln h\]

A moral good is the proportion of an increase in part of a fortune by the whole fortune. Moral fortune is the sum of all moral goods. If we consider this summation continuously for all infinitesimally small increases in physical fortune, moral fortune is the integral of the proportional reciprocal of the physical fortune by the changes in that physical fortune. Deriving this from Principle 10,

\[\int dy = y = \int \frac{kdx}{x} = k \int \frac{1}{x} dx = k\ln(x) + C\]

$C=\ln(h)$ is the constant of minimum moral good when the physical fortune is unity. We can put this in terms of a physical fortune, $x_0$, the minimum physical fortune for surviving one’s existence, that is, the cost of reproducing the conditions of one’s own existence. With $x_0=\frac{1}{\sqrt[k]{h}}$,

\[y=\int_{x_0}^x dy =\int_{x_0}^x \frac{kdx}{x}=k\ln(x) - k\ln(x_0)=k\ln(x) - k\ln\bigg(\frac{1}{\sqrt[k]{h}}\bigg)=k\ln(x) + \ln(h)\]

$h$ is a constant given by an empirical observation of $x_0$, since $h=x_0^{-k}$.

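(433) Suppose an individual with a physical fortune of $a$ expects to receive a variety of changes in fortune $\alpha, \beta, \gamma, \dots$, as increments or diminishings, with probabilities $p, q, r, \dots$ summing to unity. The corresponding moral fortunes would be

\[k\ln(a+\alpha)+\ln h, \quad k\ln(a+\beta)+\ln h, \quad k\ln(a+\gamma)+\ln h, \quad \dots\]

Thus, the expected moral fortune is

\[Y=kp\ln(a+\alpha)+kq\ln(a+\beta)+kr\ln(a+\gamma)+\cdots+\ln h\]

Let $X$ be the physical fortune corresponding to this moral fortune, $Y=k\ln X+\ln h$, with

\[X=(a+\alpha)^p(a+\beta)^q(a+\gamma)^r\cdots\]

Taking away the primitive fortune $a$, the difference $X-a$ is the increase in physical fortune equivalent to the individual's expectation.

This results in several important consequences. One of them is that the mathematically most equal game is always disadvantageous.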
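As a numerical sketch of the logarithmic moral fortune $y=k\ln x+\ln h$, the following compares the physical fortune equivalent to an expected moral fortune with the primitive fortune. The fortune of 100, the stakes of 50, and the function names are assumptions chosen for illustration; the constants $k$ and $h$ default to 1 and cancel out of the comparison:

```python
import math


def moral_fortune(x, k=1.0, h=1.0):
    """y = k ln x + ln h, the moral fortune of a physical fortune x."""
    return k * math.log(x) + math.log(h)


def equivalent_fortune(a, changes, k=1.0, h=1.0):
    """Physical fortune whose moral fortune equals the expected moral fortune."""
    expected = sum(p * moral_fortune(a + change, k, h) for p, change in changes)
    return math.exp((expected - math.log(h)) / k)


# Hypothetical mathematically equal game: fortune 100, win or lose 50 with
# probability 1/2 each, so the expected change in physical fortune is zero.
fair_game = [(0.5, 50.0), (0.5, -50.0)]
x = equivalent_fortune(100.0, fair_game)
print(x < 100.0)  # True
```

For this game the equivalent fortune is the geometric mean of 150 and 50, which lies below the primitive fortune of 100.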

Concerning the Analytical Methods of the Calculus of Probabilities

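The Binomial Theorem:

\[(x+y)^n=\sum_{k=0}^n \binom{n}{k}x^{n-k}y^k\]

Letting $x=1$,

\[(1+y)^n=\sum_{k=0}^n \binom{n}{k}y^k=\sum_{k=1}^n \binom{n}{k}y^k + 1\]

For a product over $n$ distinct letters $a_1, \dots, a_n$, the $k$th term collects the products of the letters taken $k$ at a time:

\[\prod_{i=1}^n (1+a_i) \ -1 = \sum_{k=1}^n \ \sum_{i_1<\cdots<i_k} a_{i_1}\cdots a_{i_k}\]

If we suppose these letters are equal, $a_i=y$, each inner sum has $\binom{n}{k}$ equal terms $y^k$, which recovers the expansion of $(1+y)^n$.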

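Consider the lottery composed of $n$ numbers, of which $r$ are drawn at each draw. What is the probability of drawing $s$ given numbers, $Y$, in one draw, $X$?

\[P(Y \subseteq X)=\frac{\binom{n-s}{r-s}}{\binom{n}{r}}=\frac{\binom{r}{s}}{\binom{n}{s}}\]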

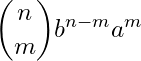

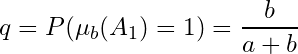
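The lottery probability can be sketched by counting draws: among all ways of drawing $r$ numbers out of $n$, the favorable draws contain the $s$ given numbers and any $r-s$ of the remaining $n-s$. The values 90, 5, and 2 and the function name are illustrative assumptions:

```python
from fractions import Fraction
from math import comb


def p_given_numbers_drawn(n, r, s):
    """Probability that s given numbers are all among the r drawn out of n."""
    favorable = comb(n - s, r - s)   # choose the other r - s drawn numbers
    return Fraction(favorable, comb(n, r))


# Hypothetical lottery: 90 numbers, 5 drawn, 2 given numbers.
print(p_given_numbers_drawn(90, 5, 2))  # 2/801
```

The same value results from the shorter ratio of the ways to choose the $s$ numbers among the $r$ drawn to the ways to choose them among all $n$.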

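Consider the Urn with $a$ white balls and $b$ black balls, drawn with replacement. Let $A_n$ be $n$ draws. Let $\mu_w(A_n)$ be the number of white balls and $\mu_b(A_n)$ be the number of black balls drawn. What is the probability of $m$ white balls and $n-m$ black balls being drawn?

\[P\bigg(\mu_w(A_n) = m \ \& \ \mu_b(A_n)=n-m\bigg)=P^n_m=?\]

$(a+b)^n$ expresses the number of all possible cases in $n$ draws, and $\binom{n}{m}b^{n-m}a^m$ expresses the number of cases in which $m$ white balls and $n-m$ black balls are drawn:

\[P^n_m=\frac{\binom{n}{m}b^{n-m}a^m}{(a+b)^n}\]

Letting $p=\frac{a}{a+b}$ be the probability of drawing a white ball in a single draw and $q=\frac{b}{a+b}$ that of drawing a black ball,

\[P^n_m=\binom{n}{m}q^{n-m}p^m\]

This is an ordinary finite difference equation:

\[\Delta P^n_{m}=\frac{P^n_{m+1}}{P^n_{m}}=\frac{(n-m)p}{(m+1)q}\]

\[{\Delta}^r P^n_{m}= \frac{P^n_{m+r}}{P^n_{m}}=\frac{p^{r}}{q^{r}}\prod_{i=0}^{r-1}\frac{n-m-i}{m+i+1}\]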
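The urn computation can be checked numerically. This sketch assumes a hypothetical urn of 2 white and 3 black balls and 5 draws with replacement; the function names are illustrative. It computes the probability of $m$ white balls and the ratio between successive values of $m$:

```python
from fractions import Fraction
from math import comb


def p_draws(n, m, a, b):
    """P^n_m: probability of m white balls in n draws with replacement."""
    p = Fraction(a, a + b)   # white on a single draw
    q = Fraction(b, a + b)   # black on a single draw
    return comb(n, m) * p**m * q**(n - m)


def successive_ratio(n, m, a, b):
    """P^n_{m+1} / P^n_m = (n - m) p / ((m + 1) q), and p / q = a / b."""
    return Fraction((n - m) * a, (m + 1) * b)


# Hypothetical urn: 2 white and 3 black balls, 5 draws.
print(p_draws(5, 2, 2, 3))           # 216/625
print(successive_ratio(5, 2, 2, 3))  # 2/3
```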

“Three players of supposed equal ability play together on the following conditions: that one of the first two players who beats his adversary plays the third, and if he beats him the game is finished. If he is beaten, the victor plays against the second until one of the players has defeated consecutively the two others, which ends the game. The probability is demanded that the game will be finished in a certain number of plays. Let us find the probability that it will end precisely at the $n$th play. For that the player who wins ought to enter the game at the play $n-1$ and win it thus at the following play. But, if in place of winning the play $n-1$ he should be beaten by his adversary who had just beaten the other player, the game would end at this play. Thus the probability that one of the players will enter the game at the play $n-1$ and will win it is equal to the probability that the game will end precisely with this play; and as this player ought to win the following play in order that the game may be finished at the $n$th play, the probability of this last case will be only one half of the preceding one.” (p.29-30)

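Let $E$ be the random variable of the number of plays it takes for the game to finish. Then

\[\mathbb{P}(E=n)=\frac{1}{2}\mathbb{P}(E=n-1)\]

This is an ordinary finite difference equation for a recurrent process. To solve it, we notice that the game cannot end sooner than the 2nd play and extend the iterative expression recursively,

\[\mathbb{P}(E=n)=\bigg(\frac{1}{2}\bigg)^{n-2}\mathbb{P}(E=2)\]

Since the winner of the first play wins the second with probability $\frac{1}{2}$, $\mathbb{P}(E=2)=\frac{1}{2}$ and

\[\mathbb{P}(E=n)=\bigg(\frac{1}{2}\bigg)^{n-1}\]

The probability that the game will end at the latest by the $n$th play is

\[\mathbb{P}(E\leq n)=\sum_{k=2}^n \bigg(\frac{1}{2}\bigg)^{k-1} = 1 - \bigg(\frac{1}{2}\bigg)^{n-1}\]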
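The three-player game can be checked by exhaustive enumeration rather than by the recurrence. This is a sketch under the stated rules, with every play a fair coin between the two players on the court; the player labels and function names are assumptions. It counts the outcome sequences for which the game ends exactly at a given play:

```python
from fractions import Fraction
from itertools import product


def ending_play(outcomes):
    """Play at which the game ends for a sequence of coin outcomes, else None."""
    a, b, waiting = 0, 1, 2   # two players on the court, one sitting out
    champion = None           # winner of the previous play
    for play, first_wins in enumerate(outcomes, start=1):
        winner, loser = (a, b) if first_wins else (b, a)
        if winner == champion:
            return play       # the champion has beaten both others in a row
        champion = winner
        a, b, waiting = winner, waiting, loser
    return None


def prob_end_exactly(n):
    """Probability that the game ends precisely at the n-th play."""
    sequences = product([True, False], repeat=n)
    hits = sum(1 for outcomes in sequences if ending_play(outcomes) == n)
    return Fraction(hits, 2**n)


def prob_end_by(n):
    """Probability that the game ends at the latest by the n-th play."""
    return sum(prob_end_exactly(k) for k in range(2, n + 1))


print(prob_end_exactly(5))  # 1/16
print(prob_end_by(5))       # 15/16
```

Because the challenger is always the player who sat out, winning two plays in a row means beating both of the other players consecutively.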

Appendix: The Calculus of Generating Functions

In general, we can define the ordinary finite difference polynomial equation. For a particular event, $E$, its probability mass function over internal-time steps $n$ is given by the distribution $f(n)=\mathbb{P}(E=n)$. The base case of the inductive definition is known for the lowest time-step, $n=0$, as $f(0)$, while the iterative step is constructed as a polynomial function $\mathcal{P}$, so that

\[f(n)=\mathcal{P}(f(n-1)) \rightarrow f(n)=\underbrace{\mathcal{P}(\cdots\mathcal{P}(}_{n}f(0))\cdots)\]
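The inductive definition can be sketched as repeated application of a step function to a known base value. The helper `iterate`, the halving step, and the base value of 1 are assumptions chosen for illustration, not part of the source:

```python
from fractions import Fraction
from functools import reduce


def iterate(step, base, n):
    """f(n) = step(step(...step(base)...)), with step applied n times."""
    return reduce(lambda value, _: step(value), range(n), base)


def halve(value):
    """A hypothetical polynomial step of degree one: multiply by 1/2."""
    return value / 2


# With f(0) = 1 and the halving step, f(n) = (1/2)^n.
print(iterate(halve, Fraction(1), 4))  # 1/16
```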

![Rendered by QuickLaTeX.com \[P(\omega)=P(\Omega=\omega)=\frac{|\Omega_{\omega}'|}{|\Omega'|}=\frac{m}{n}\]](https://www.mathacademytutoring.com/wp-content/ql-cache/quicklatex.com-7934a13cf4845a815b3a965e3178c179_l3.png)

![Rendered by QuickLaTeX.com \[P(\omega)=P\bigg(\Omega(t_1)=\omega \bigg| \Omega(t_0)\bigg)=\frac{|\Omega_{\omega}'(t_1 | t_0)|}{|\Omega'(t_1 | t_0)|}=\frac{m}{n}\]](https://www.mathacademytutoring.com/wp-content/ql-cache/quicklatex.com-cfdfc6ef10e14817e1507b1ac5bfd3bc_l3.png)

![Rendered by QuickLaTeX.com \[P(\omega)=\sum_j P(\omega_{i_j}')\]](https://www.mathacademytutoring.com/wp-content/ql-cache/quicklatex.com-535328d44c68dc42fb2946a4e2f72a01_l3.png)

![Rendered by QuickLaTeX.com \[P(\omega_1 \cap \cdots \cap \omega_n) = \prod_i P(\omega_i)\]](https://www.mathacademytutoring.com/wp-content/ql-cache/quicklatex.com-c42454fbb36a66da87687fec613457f9_l3.png)

![Rendered by QuickLaTeX.com \[P(\omega_1|\omega_0)=\frac{P(\omega_0 \cap \omega_1)}{P(\omega_0)}\]](https://www.mathacademytutoring.com/wp-content/ql-cache/quicklatex.com-f0b288dbfe299c7dd928583588eaecd0_l3.png)

![Rendered by QuickLaTeX.com \[P(\omega_0)=P(\omega_0 | \omega_{-1})=P\bigg( \Omega(t_0)=\omega_0 \bigg | \Omega(t_{-1})=\omega_{-1} \bigg)\]](https://www.mathacademytutoring.com/wp-content/ql-cache/quicklatex.com-ff4e1c08816826def4444d3d54b152fc_l3.png)

![Rendered by QuickLaTeX.com \[P(\omega_0 \cap \omega_1)=P(\omega_0 \cap \omega_1 | \omega_{-1})=P\bigg(\Omega(t_0)=\omega_0 \bigcap \Omega(t_1)=\omega_1 \bigg| \Omega(t_{-1})=\omega_{-1} \bigg)\]](https://www.mathacademytutoring.com/wp-content/ql-cache/quicklatex.com-869cba02f77d250e3868c112bd575fbe_l3.png)

![Rendered by QuickLaTeX.com \[P(\omega_1|\omega_0)=P\bigg( (\omega_1|\omega_0) \bigg | \omega_{-1} \bigg)=\frac{P(\omega_0 \cap \omega_1 | \omega_{-1})}{P(\omega_0 | \omega_{-1})}\]](https://www.mathacademytutoring.com/wp-content/ql-cache/quicklatex.com-3121a246929db4f7f0f52d68b563b115_l3.png)

![Rendered by QuickLaTeX.com \[P(\omega_1|\omega_0)=\sum_i P(\omega_1^{(i)} | \omega_0)*P(\omega_1 | \omega_1^{(i)})\]](https://www.mathacademytutoring.com/wp-content/ql-cache/quicklatex.com-8da7d0b7270eacbfe541436b5447b38e_l3.png)

![Rendered by QuickLaTeX.com \[A(\omega)=\sum_i B(\omega_i)*P(\omega_i)\]](https://www.mathacademytutoring.com/wp-content/ql-cache/quicklatex.com-15568740afda1426034b83f7e168eacf_l3.png)

![Rendered by QuickLaTeX.com \[A(\omega)=\sum_{i: \omega_i \in \omega^+} B(\omega_i)P(\omega_i) - \sum_{j: \omega_j \in \omega^-} L(\omega_j)P(\omega_j)\]](https://www.mathacademytutoring.com/wp-content/ql-cache/quicklatex.com-2fa0f538113401b99cb1b40bc3500cff_l3.png)

![Rendered by QuickLaTeX.com \[E(\omega)=\sum_i X(\omega_i)*P(\omega_i)\]](https://www.mathacademytutoring.com/wp-content/ql-cache/quicklatex.com-ea123eb8910c805ad6b3c038d3b21395_l3.png)

![Rendered by QuickLaTeX.com \[dy=\frac{kdx}{x}\]](https://www.mathacademytutoring.com/wp-content/ql-cache/quicklatex.com-4d5d839bd6e96bdab479376830791f0a_l3.png)

![Rendered by QuickLaTeX.com \[\int dy = y = \int \frac{kdx}{x} = k \int \frac{1}{x} dx = kln(x) + C\]](https://www.mathacademytutoring.com/wp-content/ql-cache/quicklatex.com-006279f9bfd4ea15c48ec14693f18494_l3.png)

![Rendered by QuickLaTeX.com \[y=\int_{x_0}^x dy =\int_{x_0}^x \frac{kdx}{x}=kln(x) - k ln(x_0)=kln(x) - k ln\bigg(\frac{1}{\sqrt[k]{h}}\bigg)=kln(x) + ln(h) \]](https://www.mathacademytutoring.com/wp-content/ql-cache/quicklatex.com-253d0377c969453468fdcbf9c40a72fc_l3.png)

![Rendered by QuickLaTeX.com \[(x+y)^n=\sum_{k=0}^n \binom{n}{k}x^{n-k}y^k\]](https://www.mathacademytutoring.com/wp-content/ql-cache/quicklatex.com-ef6d8b6b45a52b13039d3ed1b3061b97_l3.png)

![Rendered by QuickLaTeX.com \[(1+y)^n=\sum_{k=0}^n \binom{n}{k}y^k=\sum_{k=1}^n \binom{n}{k}y^k + 1\]](https://www.mathacademytutoring.com/wp-content/ql-cache/quicklatex.com-cd3953a37f524735f62d8d86a8d13657_l3.png)

![Rendered by QuickLaTeX.com \[\prod_{i=1}^n (1+a_i) \ -1 = \sum_{k=1}^n \prod_{l=1}^{l=k}a_k a_l\]](https://www.mathacademytutoring.com/wp-content/ql-cache/quicklatex.com-fe35916925118fcc4bafb3cbd29a39df_l3.png)

![Rendered by QuickLaTeX.com \[P(Y \in X)=\frac{\binom{n}{n-s}}{\binom{n}{r}}=\frac{\binom{r}{s}}{\binom{n}{s}}\]](https://www.mathacademytutoring.com/wp-content/ql-cache/quicklatex.com-4452b8757a56f83e19ebae33387870bc_l3.png)

![Rendered by QuickLaTeX.com \[P\bigg(\mu_w(A_n) = m \ \& \ \mu_b(A_n)=n-m\bigg)=P^n_m=?\]](https://www.mathacademytutoring.com/wp-content/ql-cache/quicklatex.com-53e86294f8b6b6f8af93a754aa7bef26_l3.png)

![Rendered by QuickLaTeX.com \[P^n_m=\frac{\binom{n}{m}b^{n-m}a^m}{(a+b)^n} \]](https://www.mathacademytutoring.com/wp-content/ql-cache/quicklatex.com-99703c4392acb4816318d25f9730dd17_l3.png)

![Rendered by QuickLaTeX.com \[P^n_m=\binom{n}{m}q^{n-m}p^m\]](https://www.mathacademytutoring.com/wp-content/ql-cache/quicklatex.com-eb2b2cb9d5097feef480929e642dc57a_l3.png)

![Rendered by QuickLaTeX.com \[\Delta P^n_{m}=\frac{P^n_{m+1}}{P^n_{m}}=\frac{(n-m)p}{(m+1)q}\]](https://www.mathacademytutoring.com/wp-content/ql-cache/quicklatex.com-11c2370394c67b470a89a3c0ddd5ab2a_l3.png)

![Rendered by QuickLaTeX.com \[{\Delta}^r P^n_{m}= \frac{P^n_{m+r}}{P^n_{m}}=\frac{p^{r}}{q^{r}}\prod_{i=0}^{r-1}\frac{n-m-i}{m+i+1}\]](https://www.mathacademytutoring.com/wp-content/ql-cache/quicklatex.com-eae4e7cd17c3a87d64642cf6ceb55d18_l3.png)

![Rendered by QuickLaTeX.com \[\mathbb{P}(E=n)=\frac{1}{2}\mathbb{P}(E=n-1)\]](https://www.mathacademytutoring.com/wp-content/ql-cache/quicklatex.com-7b449d49ebee54b19d67e4250edc0461_l3.png)

![Rendered by QuickLaTeX.com \[\mathbb{P}(E=n)=\bigg(\frac{1}{2}\bigg)^{n-2}\mathbb{P}(E=2)\]](https://www.mathacademytutoring.com/wp-content/ql-cache/quicklatex.com-e8d3215c5f9265fe6364e4818a06d961_l3.png)

![Rendered by QuickLaTeX.com \[\mathbb{P}(E=n)=\bigg(\frac{1}{2}\bigg)^{n-1}\]](https://www.mathacademytutoring.com/wp-content/ql-cache/quicklatex.com-08e1c37d5af340d839736c80f7471828_l3.png)

![Rendered by QuickLaTeX.com \[\mathbb{P}(E\leq n)=\sum_{k=2}^n \bigg(\frac{1}{2}\bigg)^{k-1} = 1 - \bigg(\frac{1}{2}\bigg)^{n-1}\]](https://www.mathacademytutoring.com/wp-content/ql-cache/quicklatex.com-57e757ff9d59c0d066cf93ba59d062b4_l3.png)

![Rendered by QuickLaTeX.com \[\rightarrow f(n)=\underbrace{\mathcal{P}(\cdots\mathcal{P}(}_{n}f(0))\cdots)\]](https://www.mathacademytutoring.com/wp-content/ql-cache/quicklatex.com-cfa05f229bbf9bbaa84eafc14a12008a_l3.png)